Paper Feed: June 2025

Highlighting research I find interesting and think may deserve more attention (as of 06/03/25) from academia, government, or the AI safety community.

Evals

-

BountyBench: Dollar Impact of AI Agent Attackers and Defenders on Real-World Cybersecurity Systems

Andy K. Zhang, Joey Ji, […] Daniel E. Ho, Percy Liang (2025)

-

Cross-domain time horizons

Thomas Kwa (2025)

-

System Card: Claude Opus 4 & Claude Sonnet 4

Anthropic (2025)

-

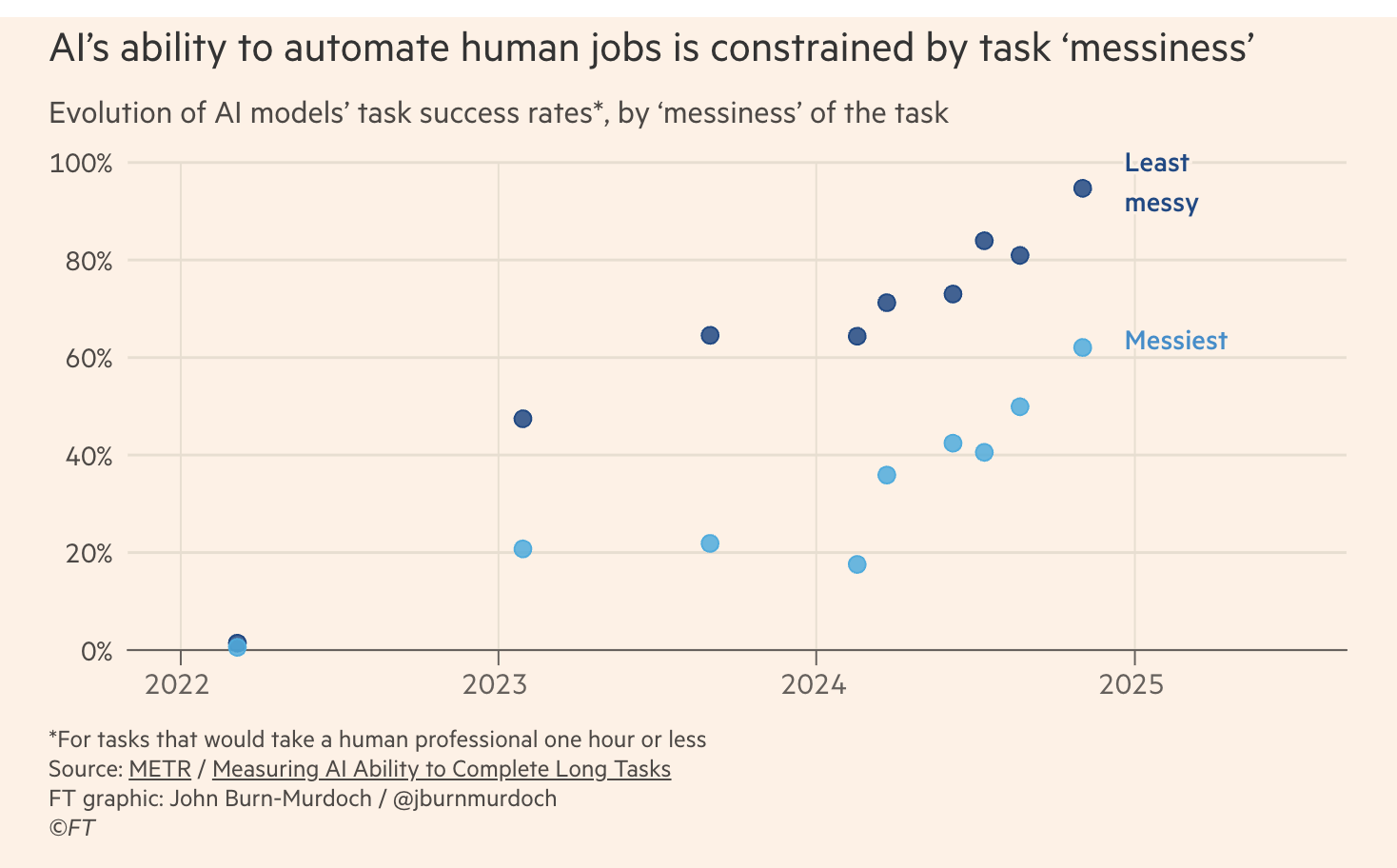

Why hasn't AI taken your job yet?

John Burn-Murdoch (2025)

Why this is notable: The general trends, but not the offsets, between messy and non-messy tasks are roughly similar (plot below, created from Figure 9 in the METR paper). 'Messy' tasks are tasks more like those found in the real world, as measured by features (e.g., "from a real life source" or "potential for irreversible mistakes") designed to have real-world relevance. This is a nice framing. However, the factors (Appendix D in Measuring AI Ability to Complete Long Tasks) are somewhat ad hoc and model-centric. For example, the factors "self-modification required" or "self improvement required" are relevant things to test for but aren't really 'messy.' It would be great if messiness were made more coherent and rigorous.

-

Claude Just Refereed the Anthropic Economic Index. Reviewer 2 Has Thoughts

Andrey Fradkin and Seth Benzell (Apr 21, 2025)

Why this is notable: A common complaint is that professional economists do not typically take trends in AI seriously. I've only listened to a few of the most recent episodes, but I've found this series on the economics of AI useful for understanding the perspective of mainstream(ish) economics.

Some relevant excerpts from this podcast:

Excerpt 1:

This is a nice point - how do usage patterns change when they've just released a new model? Are we seeing a fundamental change in the usage patterns or mostly more of the same? Is it a slow drift or a sharp discontinuity? There are so many questions to answer with this type of data, but not necessarily economic ones.

Excerpt 2:

Seth: What I make of this is that the title of this paper should just be "Which Tasks Are Performed with AI," not "Which Economic Tasks." It's not clear what makes a task economic. In my opinion, a task is economic if it's either some sort of Robinson Crusoe economy where even if I'm not interacting with anyone, this is an economic behavior because I'm building a thing that I'm going to use, or what makes something economic is that I'm participating in a market with this thing and I'm going to buy it and sell it after I go through these steps.

"My video game is crashing cause I only have eight gigabytes of RAM" doesn't sound like either of those. It sounds like this guy is troubleshooting his consumption, which maybe could be thought of as the consumer taking on some of the job of customer service. The other example, "Can you make sure this blog post follows Chicago style?" - if I'm making an artistic or creative project that I'm just putting out on the internet for people, again, I'm not sure I would call that economic activity. So no problems with this paper being about measuring what activities or tasks people do with AI, but I think it's probably a breach too far to call these economic tasks.

Andrey: I think I agree with you. There needs to be more metadata around these conversations. A survey of whether users are using this for their job or not could be really informative, or even just a subset analysis of just the pro users who are more likely to be using this for their job.

I do think it's an interesting phenomenon of substituting professional labor with personal labor. Hal Varian used to bring up this example all the time with YouTube - before, you'd hire someone to repair your appliance or do work around the house, but now you can watch a YouTube video and do it yourself. This means YouTube is generating tremendous economic value that's not being measured.

I think both of us are generally on board with that idea - GDP is going to miss a bunch of interesting activity just by virtue of how it's measured. But especially for an academic contribution, we want a more rigorous analysis.

Science of DL / Interpretability

-

Beyond Gradients: Using Curvature Information for Deep Learning

Juhan Bae (2025)

-

Explaining Context Length Scaling and Bounds for Language Models

Jingzhe Shi, Qinwei Ma, Hongyi Liu, Hang Zhao, Jeng-Neng Hwang, Serge Belongie, Lei Li (2025)

-

Towards eliciting latent knowledge from LLMs with mechanistic interpretability

Bartosz Cywiński, Emil Ryd, Senthooran Rajamanoharan, Neel Nanda (2025)

-

Structural Entropy Guided Agent for Detecting and Repairing Knowledge Deficiencies in LLMs

Yifan Wei, Xiaoyan Yu, Tengfei Pan, Angsheng Li, Li Du (2025)

Technical AI Governance

-

TOPLOC: A Locality Sensitive Hashing Scheme for Trustless Verifiable Inference

Jack Min Ong, Matthew Di Ferrante, […], Johannes Hagemann (2025)

-

The First Compute Arms Race: the Early History of Numerical Weather Prediction

Charles Yang (2025)

Why this is notable: I liked this a lot. It would be great to see the scaling trends extended to today.

Compute / Scaling / Reasoning

-

Response to: How Fast Can Algorithms Advance Capabilities?

Ryan Greenblatt (2025)

Why this is notable: This is a response to "How Fast Can Algorithms Advance Capabilities?" by Henry Josephson (Epoch AI).

-

Which innovations actually change the exponent in the scaling power law?

Thread 1, Thread 2

Katie Everett (2025)

Why this is notable: Also see this related Beren Millidge write-up, which gives an intuition for power-law scaling arising from (something like the eigenvalues of the covariance matrix of) the training dataset. It seems spiritually true to me: The Scaling Laws Are In Our Stars, Not Ourselves

-

Why We Think

Lilian Weng (2025)

Why this is notable: Log-loss is useful in CoT search because chains of thought can be seen as samples from the posterior of a latent variable model. "The fundamental intent of test-time compute is to adaptively modify the model's output distribution at test time."

-

Parallel Scaling Law for Language Models

Mouxiang Chen, Binyuan Hui, Zeyu Cui, Jiaxi Yang, Dayiheng Liu, Jianling Sun, Junyang Lin, Zhongxin Liu (2025)

-

Predicting Empirical AI Research Outcomes with Language Models

Jiaxin Wen, […], He He, Shi Feng (2025)

General Safety

-

On the Feasibility of Using LLMs to Execute Multistage Network Attacks

Brian Singer, Keane Lucas, Lakshmi Adiga, Meghna Jain, Lujo Bauer, Vyas Sekar (2025)

-

Logicbreaks: A Framework for Understanding Subversion of Rule-based Inference

Anton Xue, Avishree Khare, […], Eric Wong (2024)

-

LLMs unlock new paths to monetizing exploits

Nicholas Carlini, Milad Nasr, Edoardo Debenedetti, Barry Wang, Christopher A. Choquette-Choo, Daphne Ippolito, Florian Tramèr, Matthew Jagielski (2025)

Miscellaneous

-

AI, Materials, and Fraud, Oh My!

Ben Shindel (2025)